By Beth Williamson

With the rise of AI, experts, analysts, businesses, and end users continue to analyze the risks and benefits of what is undoubtedly a transformative technology.

Although sentiment around AI is mixed and the outcomes are still unknown, we do know that data privacy risk, already a known concern, is heightened by AI.

“As artificial intelligence evolves, it magnifies the ability to use personal information in ways that can intrude on privacy interests by raising analysis of personal information to new levels of power and speed.”

Cameron Kerry, Brookings Institution

AI: A danger to human rights?

The internet became mainstream in the late 1990s; just over a decade later, in 2011, the United Nations declared internet access a human right, stating that “the internet has become a key means by which individuals can exercise their right to freedom and expression.”

AI adoption has more than doubled since 2017,1 and it has become apparent that protecting personally identifiable information (PII) and ensuring data privacy are significant concerns for all internet users.

To address this concern, data privacy regulations such as GDPR, CCPA, and CPRA have been established, giving consumers a say in how businesses use and alter their personal information.

Although by no means perfect, these laws have provided some controls over an individual’s data. With the onset of AI, protecting the individual’s privacy and data is becoming ever more complex and will further challenge the standards and/or regulations currently in place.

Companies that implement best practices and continue to innovate on this front will have a greater likelihood of staying ahead of the regulatory landscape, thereby reducing their risk profiles.

Data privacy is often associated with AI models. As AI models depend on data quality to deliver relevant results, this technology’s success hinges on privacy protection being integral to the design.

However, without the proper controls in place, there is the potential for users’ input information to appear in model outputs in a form that makes individual users identifiable.

Therefore, it is understandable that users are wary of automated technologies that obtain and use their data, which may include sensitive information.

Additional privacy risks that could be exacerbated by AI include data exploration, identification and tracking (e.g., data stored by a self-driving car), inaccuracies and biases (e.g., demographic errors tied to facial recognition) as well as prediction (e.g., machine-learning algorithms based on a user’s internet and social media searches).2

Responsible AI can drive profitability

Privacy concerns are not being disregarded. According to McKinsey,3 the results of a survey of more than 1,300 business leaders and 3,000 consumers globally “suggest that establishing trust in products and experiences that leverage AI, digital technologies and data not only meets consumer expectations but could also promote growth.”

Further research indicates that organizations that are best positioned to build digital trust are also more likely than others to see annual growth rates of at least 10 percent on their top and bottom lines.

Digital trustworthiness: Part and parcel of broader risk management

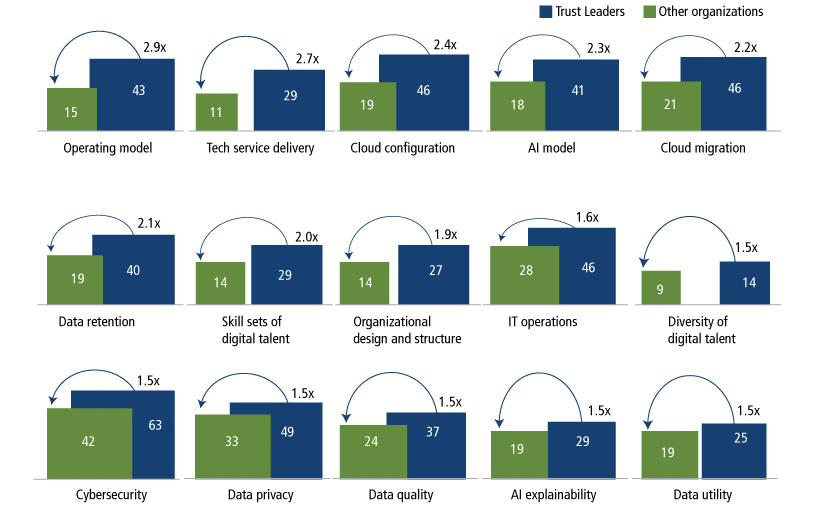

McKinsey’s global survey on digital trust also illustrates that organizations demonstrating leadership on the topic of digital trust are twice as likely to mitigate a variety of other digital risks.

This echoes the Calamos Sustainable Equities Team’s thesis that companies with strong initiatives aimed at risk mitigation across their material nonfinancial impact areas will often demonstrate strong governance and controls across their financials.

Digital trust leaders are often twice as likely to mitigate a variety of digital risks (% of respondents)

Source: McKinsey & Company, “McKinsey Global Survey on Digital Trust,” 1,333 C-suite and senior executives responsible for risk and technology, May 2022. www.mckinsey.com.

Responsible AI Case Study: Microsoft

Within the Sustainable Equities team’s investable universe, we believe Microsoft (MSFT) is one company at the forefront of responsible AI. Microsoft seeks to protect consumer data for its own clients as well as provide solutions to the marketplace broadly.

According to the company, Microsoft ensures privacy while using AI through Azure differential privacy, which “protects and preserves privacy by randomizing data and adding noise to conceal personal information from data scientists.”4

Differential privacy enables researchers and analysts to extract useful insights from datasets containing personal information and offers stronger privacy protections by introducing “statistical noise.”
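To make the idea of “statistical noise” concrete, here is a minimal Python sketch of one common differential privacy technique, the Laplace mechanism, applied to a simple count. It is an illustration only and is not Microsoft's or Azure's implementation: the function names, the sample ages, and the choice of epsilon are all hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a zero-mean Laplace distribution with the given scale."""
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one person changes a count by at most 1,
    # so the sensitivity of this query is 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: ages of eight individuals.
ages = [23, 35, 41, 29, 62, 57, 38, 45]
rng = random.Random(0)  # seeded only so the sketch is repeatable
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"Noisy count of people aged 40 or older: {noisy:.2f}")
```

In this sketch, a smaller epsilon adds more noise, which strengthens privacy at the cost of accuracy. An analyst sees only the noisy count, so the presence or absence of any single individual is harder to infer, while averages over many queries or large groups remain useful.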

Microsoft is also working to expand its product offerings to include a suite of data privacy software tools, including Purview and Priva, to help other organizations identify and protect against data privacy risks.

1 McKinsey & Company, “The state of AI in 2022—and a half decade in review,” December 6, 2022, www.mckinsey.com.

2 Western Governors University, “How AI Is Affecting Information Privacy and Data,” September 29, 2021, www.wgu.edu.

3 McKinsey & Company, “Why digital trust truly matters,” September 2022, www.mckinsey.com.

4 Microsoft, “Differential Privacy – Microsoft AI Lab,” (n.d.), www.microsoft.com.
